5 Key Capabilities That Define Powerful Agentic AI Platforms

Agent-driven AI is transforming how businesses operate, moving from simple automation to autonomous systems that think, act, and collaborate. But what actually defines a truly capable agentic AI platform? In CNCF’s upcoming on-demand session, Akka outlines the five essential capabilities that make agentic AI effective, scalable, and enterprise-ready. 📅 Event Details: Date: June 12; Time: 12:00 AM…

Build a Gemini 2.5 Fullstack AI Agent on Replit in Minutes

Gemini 2.5 + LangGraph + Replit = AI Superpowers in Your Browser. Thanks to Paul Couvert’s tutorial, you can now deploy a fullstack AI agent—powered by Google’s Gemini 2.5—without ever leaving your browser. Whether you’re a developer or an AI enthusiast, this is one of the easiest ways to get started with conversational agents using…

AIOps, Automation & More: How AI is Changing IT Forever

The Transformative Role of AI in the IT Sector. Artificial Intelligence (AI) is not just a buzzword anymore; it’s the backbone of modern IT innovation. As organizations strive for speed, scalability, security, and smarter decision-making, AI has become a critical enabler across every layer of the IT stack. From automating routine tasks to powering predictive insights,…

Google Launches Official Open-Source AI App for Local On-Device Inference

In a move that could significantly reshape the mobile AI landscape, Google has officially released an open-source app that allows users to run AI models locally on their smartphones—with no internet connection required. This powerful new tool is free, offline-capable, and fully open-source. Best of all, it pairs seamlessly with Google’s latest Gemma 3n open-source…

Introducing DuckLake: The SQL-Native Future of Data Lakes

In the ever-evolving world of data, the need for scalable, flexible, and high-performance infrastructure has never been more urgent. Today, we’re excited to introduce DuckLake — an integrated data lake and catalog format that sets a new standard for how organizations manage and analyze data at scale. DuckLake is a bold step forward in data…

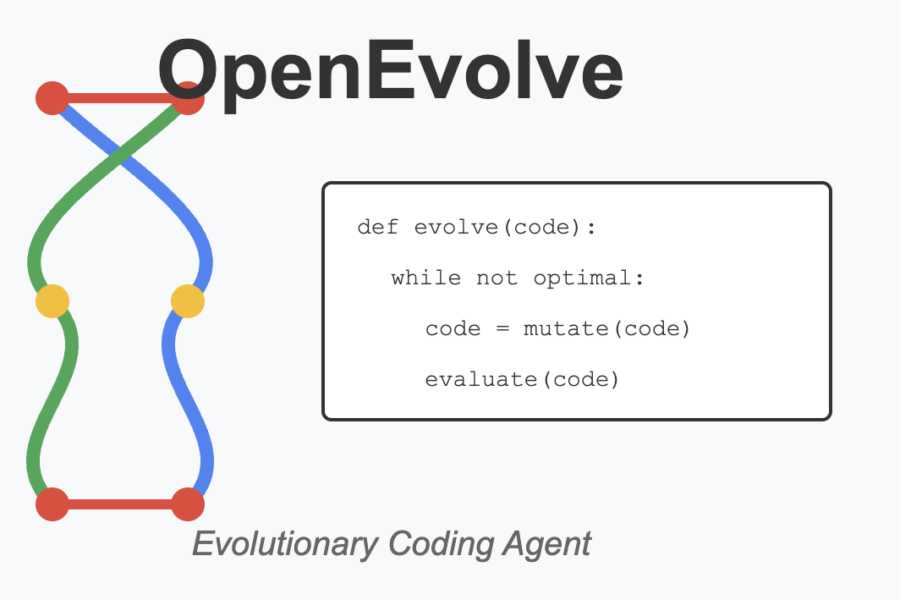

What Is OpenEvolve? Open-Source AlphaEvolve Alternative Explained

“Don’t just write code. Evolve it.” The age of intelligent software engineering has arrived — and OpenEvolve is at the cutting edge. This open-source project, inspired by Google’s AlphaEvolve, introduces a powerful way to evolve code using Large Language Models (LLMs) through iterative optimization. Unlike traditional code generators, OpenEvolve doesn’t just produce one answer. It…

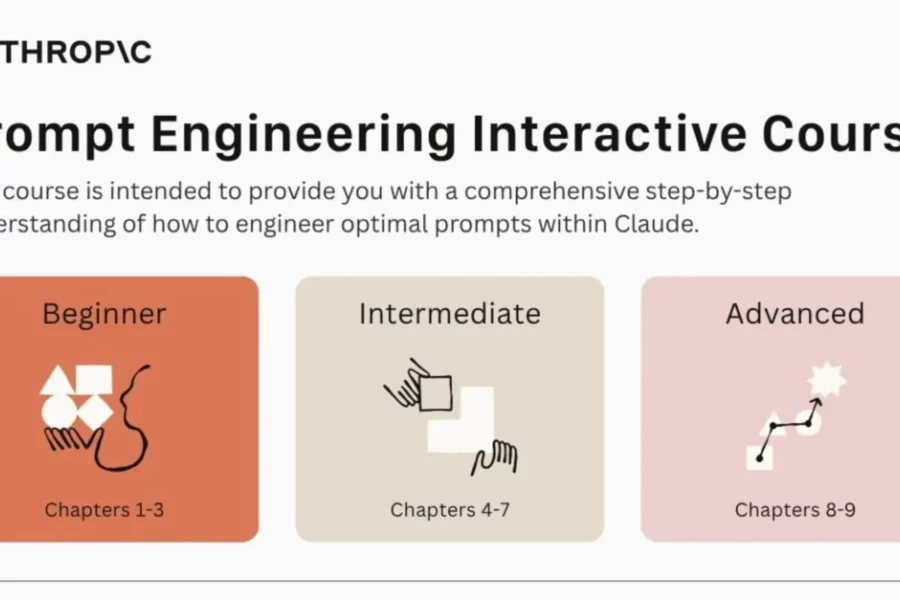

Become a Prompt Engineering Pro with Anthropic’s Free Interactive Guide

Prompt engineering is rapidly becoming a foundational skill in the world of artificial intelligence. With the rise of powerful language models like Claude, ChatGPT, and others, the ability to communicate effectively with these models through well-structured prompts is a game-changer. To help both beginners and professionals master this crucial skill, Anthropic has released a free,…

AI That Clicks, Scrolls, and Searches Like a Human

In the evolving landscape of artificial intelligence, Hugging Face’s SmolAgent Computer Agent introduces a significant leap in autonomous digital interaction. This experimental tool showcases how an AI agent can visually interpret, navigate, and operate websites in a human-like manner. What Is the Computer Agent? Developed as part of the SmolAgents initiative, the Computer Agent is…

Fortify Your Containers: Meet Docker’s Enterprise-Grade Hardened Images

As container adoption grows across organizations of all sizes, so do concerns around security—especially when it comes to software supply chain threats. In response, Docker has introduced a powerful new offering: Docker Hardened Images, a catalog of enterprise-grade, security-hardened container images designed to simplify and strengthen container security from the inside out. Let’s dive into…

Proactive Secrets Management, Now Made Simple with HCP Vault Radar GA

In today’s fast-paced digital landscape, managing secrets like API keys, tokens, and passwords has become a mission-critical priority for organizations. With the rise of DevOps practices, hybrid environments, and cloud-native applications, secrets can easily become scattered across source code, cloud services, and collaboration platforms—making them vulnerable to exposure. To tackle this growing challenge, HashiCorp has…

Say Hello to Observability-as-Code: Grafana 12 Leads the Way

The world of observability just took a major leap forward. With the release of Grafana 12, Grafana Labs is not just rolling out new features—they’re reshaping how teams visualize, manage, and automate their observability data. This version brings cutting-edge capabilities like Git Sync, dynamic dashboards, enhanced Drilldown apps, and a Cloud Migration Assistant, all aimed…

Beyond Chatbots: Inside Cisco’s Intelligent Engineering Assistant

In the high-speed world of modern software engineering, innovation often gets bottlenecked by repetitive, time-consuming operational tasks. What if there were an intelligent teammate who could manage these tasks for you, faster, more consistently, and without ever taking a break? Enter JARVIS, Cisco’s AI-powered platform engineering assistant developed by Outshift, its innovation arm. Named after Iron…