NVIDIA’s CUDA platform continues to dominate GPU-accelerated computing, powering everything from AI training and inference to scientific simulations and real-time graphics. CUDA Toolkit 13.1, released in late 2025, brought significant additions to the ecosystem, most notably CUDA Tile (cuTile Python) for higher-level, tile-based programming. Even so, established features such as Unified Memory, CUDA Graphs, and Cooperative Groups remain essential for getting the most out of modern GPU architectures like Hopper and Blackwell.

These tools help developers manage complexity, reduce overhead, and scale across multi-GPU setups. Whether you’re optimizing legacy code or building next-gen applications, mastering them is key to leveling up your GPU game.

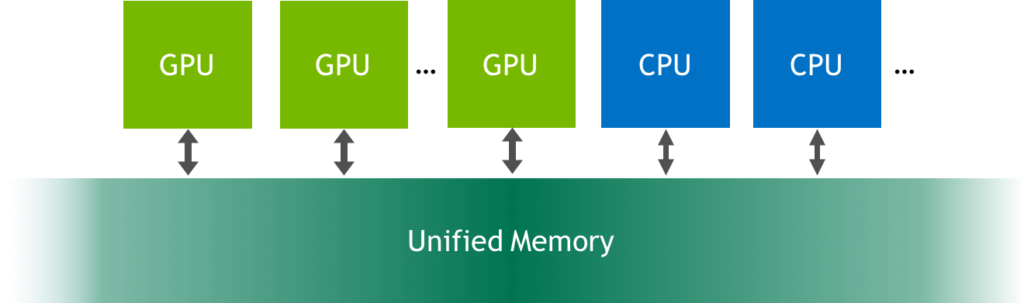

Unified Memory: Seamless Host-Device Data Sharing

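Explicit transfers with cudaMemcpy add code complexity and, when they are not overlapped with compute, can bottleneck an application. Unified Memory (allocated with cudaMallocManaged) provides a single virtual address space accessible from both CPU and GPU; the runtime migrates pages on demand when either side takes a page fault. On-demand migration is not free, which is why the hints listed below matter for performance.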
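As a minimal sketch of the single-pointer model described above, the snippet below allocates one managed buffer, fills it on the host, scales it on the device, and reads it back on the host without any cudaMemcpy. The kernel `scale` and the helper `run_managed_scale` are hypothetical names chosen for illustration; only the CUDA runtime calls are real API.

```cuda
#include <cuda_runtime.h>

// Multiply every element by `factor`; one thread per element.
__global__ void scale(float* data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;
}

// Hypothetical helper: returns true when the host sees the values the GPU wrote.
bool run_managed_scale(int n) {
    float* data = nullptr;
    // One allocation, one pointer, usable from both host and device code.
    if (cudaMallocManaged(&data, static_cast<size_t>(n) * sizeof(float)) != cudaSuccess)
        return false;

    for (int i = 0; i < n; ++i) data[i] = 1.0f;       // host writes, no staging buffer

    scale<<<(n + 255) / 256, 256>>>(data, n, 2.0f);   // device uses the same pointer

    // Wait for the kernel before the host touches the data again.
    bool ok = (cudaGetLastError() == cudaSuccess) &&
              (cudaDeviceSynchronize() == cudaSuccess);

    for (int i = 0; ok && i < n; ++i) ok = (data[i] == 2.0f);   // host reads, no cudaMemcpy

    cudaFree(data);
    return ok;
}
```

Calling `run_managed_scale(1 << 20)` from `main` and compiling with `nvcc` is enough to try it. The synchronize call is the part that is easy to forget: the kernel launch is asynchronous, so the host loop must not start before it completes.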

Benefits include:

- Simplified porting of CPU code to GPU.

- Oversubscription (using more memory than physically on the GPU).

- Hints and prefetching for fine-tuned performance (e.g., cudaMemPrefetchAsync).

In multi-GPU systems, the same managed pointer is valid on every device, and cudaMemAdvise hints such as a preferred location or an accessed-by device tell the driver where pages should live.
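The prefetch hint mentioned above can be sketched as follows. One caveat, stated as an assumption rather than a guarantee: to my understanding, CUDA 13 changed cudaMemPrefetchAsync to take a `cudaMemLocation` plus a flags argument, while CUDA 12.x and earlier take an integer device ID. The hypothetical shim `prefetch_to` hides that difference behind a `CUDART_VERSION` check; verify it against the headers of the toolkit you actually build with. `add_one` and `run_prefetch_demo` are likewise illustrative names.

```cuda
#include <cuda_runtime.h>

__global__ void add_one(int* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

// Hypothetical shim over the two prefetch signatures (assumed CUDA 13 change).
// Pass cudaCpuDeviceId as `device` to prefetch toward host memory.
static cudaError_t prefetch_to(const void* ptr, size_t bytes, int device,
                               cudaStream_t stream) {
#if CUDART_VERSION >= 13000
    cudaMemLocation loc = {};
    bool to_host = (device == cudaCpuDeviceId);
    loc.type = to_host ? cudaMemLocationTypeHost : cudaMemLocationTypeDevice;
    loc.id = to_host ? 0 : device;
    return cudaMemPrefetchAsync(ptr, bytes, loc, 0 /* flags */, stream);
#else
    return cudaMemPrefetchAsync(ptr, bytes, device, stream);
#endif
}

bool run_prefetch_demo(int n) {
    int device = 0, concurrent = 0;
    if (cudaGetDevice(&device) != cudaSuccess) return false;
    // Prefetching requires concurrent managed access; skip the hint without it.
    cudaDeviceGetAttribute(&concurrent, cudaDevAttrConcurrentManagedAccess, device);

    const size_t bytes = static_cast<size_t>(n) * sizeof(int);
    int* data = nullptr;
    if (cudaMallocManaged(&data, bytes) != cudaSuccess) return false;
    for (int i = 0; i < n; ++i) data[i] = i;          // pages start out on the host

    cudaStream_t stream;
    if (cudaStreamCreate(&stream) != cudaSuccess) { cudaFree(data); return false; }

    bool ok = true;
    // Move pages to the GPU ahead of the kernel instead of faulting them in.
    if (concurrent) ok = (prefetch_to(data, bytes, device, stream) == cudaSuccess);
    add_one<<<(n + 255) / 256, 256, 0, stream>>>(data, n);
    ok = (cudaGetLastError() == cudaSuccess) && ok;
    // Bring the results back before the host loop reads them.
    if (concurrent) ok = (prefetch_to(data, bytes, cudaCpuDeviceId, stream) == cudaSuccess) && ok;
    ok = (cudaStreamSynchronize(stream) == cudaSuccess) && ok;

    for (int i = 0; ok && i < n; ++i) ok = (data[i] == i + 1);

    cudaStreamDestroy(stream);
    cudaFree(data);
    return ok;
}
```

The result is the same with or without the prefetch calls; they only change when the page migration happens. That makes them safe to add incrementally while watching the Unified Memory rows in a profiler timeline.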

CUDA Graphs: Eliminate Launch Overhead

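Each kernel launch carries a fixed CPU-side cost in the driver, which adds up in iterative workloads built from many short kernels. CUDA Graphs capture a sequence of operations (kernels, memory copies, and the dependencies between them) into a single graph that is instantiated once and then launched with one call.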

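Graphs suit deep learning inference and simulation loops. Speedups in the 2-10x range are plausible when launch overhead dominates; workloads with long-running kernels gain much less. Executable-graph updates let you change parameters without re-instantiating, conditional nodes bring data-dependent control flow into the graph, and because graphs are launched into streams they can overlap with other work.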
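One common way to build a graph is stream capture: record the launches once, instantiate, then replay. The sketch below assumes the CUDA 12+ three-argument form of cudaGraphInstantiate; `increment`, `run_graph_demo`, and the `CUDA_OK` macro are hypothetical names for illustration, and the early returns skip cleanup for brevity.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Illustrative error check: bail out of the enclosing bool function on failure.
#define CUDA_OK(call) do { if ((call) != cudaSuccess) return false; } while (0)

__global__ void increment(int* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

bool run_graph_demo(int n, int kernels_per_graph, int iterations) {
    const size_t bytes = static_cast<size_t>(n) * sizeof(int);
    int* d = nullptr;
    CUDA_OK(cudaMalloc(&d, bytes));
    CUDA_OK(cudaMemset(d, 0, bytes));

    cudaStream_t stream;
    CUDA_OK(cudaStreamCreate(&stream));

    // Record phase: launches into `stream` are captured, not executed.
    cudaGraph_t graph;
    CUDA_OK(cudaStreamBeginCapture(stream, cudaStreamCaptureModeGlobal));
    for (int k = 0; k < kernels_per_graph; ++k)
        increment<<<(n + 255) / 256, 256, 0, stream>>>(d, n);
    CUDA_OK(cudaStreamEndCapture(stream, &graph));

    // Instantiate once (CUDA 12+ signature: exec, graph, flags).
    cudaGraphExec_t exec;
    CUDA_OK(cudaGraphInstantiate(&exec, graph, 0));

    // Replay phase: one launch call per iteration instead of kernels_per_graph.
    for (int it = 0; it < iterations; ++it)
        CUDA_OK(cudaGraphLaunch(exec, stream));
    CUDA_OK(cudaStreamSynchronize(stream));

    std::vector<int> host(n);
    CUDA_OK(cudaMemcpy(host.data(), d, bytes, cudaMemcpyDeviceToHost));
    bool ok = true;
    for (int v : host) ok = ok && (v == kernels_per_graph * iterations);

    cudaGraphExecDestroy(exec);
    cudaGraphDestroy(graph);
    cudaStreamDestroy(stream);
    cudaFree(d);
    return ok;
}
```

With `run_graph_demo(1 << 16, 20, 100)`, the host issues 100 graph launches rather than 2,000 kernel launches. Whether that shows up as a measurable speedup depends on how short the kernels are, so it is worth timing both variants on your own workload.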

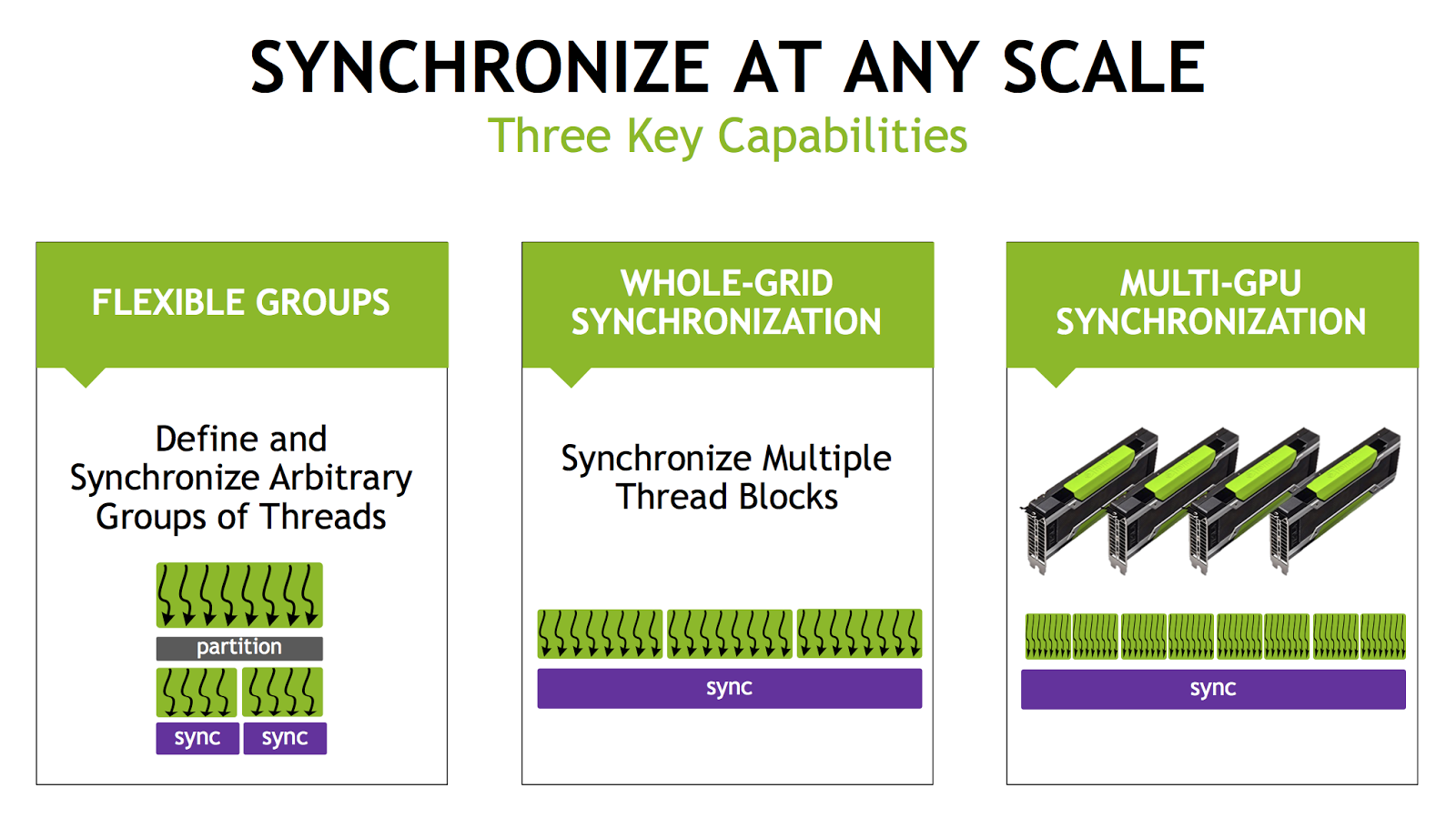

Cooperative Groups: Granular Thread Synchronization

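A plain __syncthreads() synchronizes exactly one thread block, no more and no less. Cooperative Groups make the synchronizing group an explicit object, so you can sync at finer scopes (a warp-sized tile) or wider ones (the entire grid, when the kernel is started with a cooperative launch). The multi-device variant (multi_grid_group) has been deprecated since CUDA 11.3, so new code should not rely on it.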
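For the grid-wide case, a rough sketch looks like this: every block writes a value, the whole grid synchronizes, and then each block reads what a different block wrote. The kernel must be started with cudaLaunchCooperativeKernel and the grid must fit on the device all at once, which is why the block count is capped using an occupancy query. `two_phase` and `run_grid_sync_demo` are hypothetical names.

```cuda
#include <algorithm>
#include <cooperative_groups.h>
#include <cuda_runtime.h>

namespace cg = cooperative_groups;

// Phase 1: each block publishes a value. Phase 2: each block reads its neighbor's.
__global__ void two_phase(int* stage, int* out) {
    cg::grid_group grid = cg::this_grid();
    if (threadIdx.x == 0) stage[blockIdx.x] = blockIdx.x + 1;
    grid.sync();   // barrier across every block in the grid
    if (threadIdx.x == 0) out[blockIdx.x] = stage[(blockIdx.x + 1) % gridDim.x];
}

bool run_grid_sync_demo() {
    const int threads = 128;
    int device = 0, supported = 0, sm_count = 0, blocks_per_sm = 0;
    if (cudaGetDevice(&device) != cudaSuccess) return false;
    cudaDeviceGetAttribute(&supported, cudaDevAttrCooperativeLaunch, device);
    cudaDeviceGetAttribute(&sm_count, cudaDevAttrMultiProcessorCount, device);
    cudaOccupancyMaxActiveBlocksPerMultiprocessor(&blocks_per_sm, two_phase, threads, 0);
    if (!supported || sm_count * blocks_per_sm < 2) return false;

    // All blocks must be resident simultaneously, so stay within the occupancy limit.
    const int blocks = std::min(sm_count * blocks_per_sm, 64);

    int *stage = nullptr, *out = nullptr;
    if (cudaMallocManaged(&stage, blocks * sizeof(int)) != cudaSuccess) return false;
    if (cudaMallocManaged(&out, blocks * sizeof(int)) != cudaSuccess) {
        cudaFree(stage);
        return false;
    }

    void* args[] = { &stage, &out };   // cooperative launch takes an argument array
    bool ok = cudaLaunchCooperativeKernel((void*)two_phase, dim3(blocks), dim3(threads),
                                          args, 0, 0) == cudaSuccess;
    ok = ok && (cudaDeviceSynchronize() == cudaSuccess);

    for (int b = 0; ok && b < blocks; ++b) ok = (out[b] == ((b + 1) % blocks) + 1);

    cudaFree(stage);
    cudaFree(out);
    return ok;
}
```

Launching the same kernel with the usual `<<<...>>>` syntax would not be valid here, since `grid.sync()` relies on the co-residency guarantee that only the cooperative launch provides.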

Use cases:

- Irregular algorithms (e.g., graphs, sparse matrices).

- Async operations like memcpy_async.

- Multi-block cooperation through grid-wide sync (multi-device groups are deprecated).
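Cooperative Groups also work below block scope, which is often where irregular algorithms benefit most. As an illustrative sketch, the kernel below splits each block into 32-thread tiles, sums values within a tile using `cg::reduce`, and lets one thread per tile add the partial sum to a global total. `tile_sum` and `run_tile_sum_demo` are hypothetical names; the block size is assumed to be a multiple of 32.

```cuda
#include <cooperative_groups.h>
#include <cooperative_groups/reduce.h>
#include <cuda_runtime.h>

namespace cg = cooperative_groups;

__global__ void tile_sum(const int* in, int n, int* total) {
    cg::thread_block block = cg::this_thread_block();
    cg::thread_block_tile<32> tile = cg::tiled_partition<32>(block);

    int i = blockIdx.x * blockDim.x + threadIdx.x;
    // Out-of-range threads contribute 0 but still take part in the collective.
    int value = (i < n) ? in[i] : 0;

    // Sum across the 32 threads of this tile; every thread gets the result.
    int tile_total = cg::reduce(tile, value, cg::plus<int>());

    // One atomic per tile instead of one per thread.
    if (tile.thread_rank() == 0) atomicAdd(total, tile_total);
}

bool run_tile_sum_demo(int n) {
    int *in = nullptr, *total = nullptr;
    if (cudaMallocManaged(&in, static_cast<size_t>(n) * sizeof(int)) != cudaSuccess)
        return false;
    if (cudaMallocManaged(&total, sizeof(int)) != cudaSuccess) {
        cudaFree(in);
        return false;
    }
    for (int i = 0; i < n; ++i) in[i] = 1;
    *total = 0;

    tile_sum<<<(n + 255) / 256, 256>>>(in, n, total);
    bool ok = (cudaGetLastError() == cudaSuccess) &&
              (cudaDeviceSynchronize() == cudaSuccess);
    ok = ok && (*total == n);   // n ones should sum to n

    cudaFree(in);
    cudaFree(total);
    return ok;
}
```

Because the tile size is a template parameter, the same pattern works with smaller tiles (16, 8, 4 threads) when the data is partitioned more finely than a warp.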

Looking Ahead: CUDA Tile and Beyond

While these classics endure, keep an eye on cuTile Python in CUDA 13.1: a Python DSL for tile-based kernels that abstracts away per-thread management, targeting Blackwell GPUs and beyond.

Why Invest in These Features Now?

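As GPUs scale up (Blackwell being the current extreme), the costs of moving data, launching kernels, and synchronizing threads matter more. Unified Memory, Graphs, and Cooperative Groups each target one of those costs and help keep multi-GPU code scalable. Profile with Nsight Systems (timeline and launch gaps) and Nsight Compute (per-kernel metrics) to find the quick wins.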

Dive into the latest CUDA Programming Guide and experiment—these tools will transform your GPU workflows in 2026 and beyond.
