Managing a Kubernetes load balancer has always been challenging. In the past, setting one up meant a time-consuming cycle of tickets and coordination between network and Linux engineers. In the public cloud, on-demand load balancer services are now available to Kubernetes instantly, which simplifies the job of DevOps engineers. Replicating this ease of use in on-premises private clouds, however, remains complex.

What user experience are cloud practitioners expecting from Layer-4 load balancers?

Public cloud providers offer significant convenience by automatically provisioning and setting up the necessary services for applications.

All leading public cloud providers offer on-demand load balancer services for exposing Kubernetes applications to the public internet. If you run an Ingress controller instead, it can use an on-demand load balancer to expose its own public TCP port to the internet.

How can we get this cloud-like experience in a private cloud, on-prem?

Minimal expectations for a modern Layer-4 Load Balancer (L4LB):

- Native integration with Kubernetes

- Immediate, on-demand provisioning

- Manageable via Kubernetes CRDs, Terraform, a REST API, and an intuitive GUI

- High Availability

- Horizontally scalable

- TCP/HTTP health checks

- Easy to install & use (L4LB is not rocket science)
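For the TCP health check listed above, the simplest form is a timed connect: a backend counts as healthy if it completes a TCP handshake within a timeout. The Python sketch below assumes exactly that; the function names `tcp_health_check` and `healthy_backends` and the 1-second default timeout are illustrative choices, not taken from any specific load balancer. An HTTP check would additionally send a request over the connection and inspect the status code.

```python
import socket


def tcp_health_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if host:port accepts a TCP connection within `timeout` seconds."""
    try:
        # create_connection performs the TCP three-way handshake and raises OSError on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, host unreachable, or timed out (socket.timeout is an OSError subclass).
        return False


def healthy_backends(backends, timeout: float = 1.0):
    """Filter a list of (host, port) backends down to those passing the TCP check."""
    return [(host, port) for host, port in backends if tcp_health_check(host, port, timeout)]
```

A production load balancer would run such probes periodically and typically require several consecutive failures before removing a backend, to avoid flapping.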

Nice-to-have features for a modern L4LB:

- Runs on commodity hardware

- DPDK / SmartNIC acceleration support

- Built on a well-known open-source ecosystem and standard protocols (no proprietary black boxes)

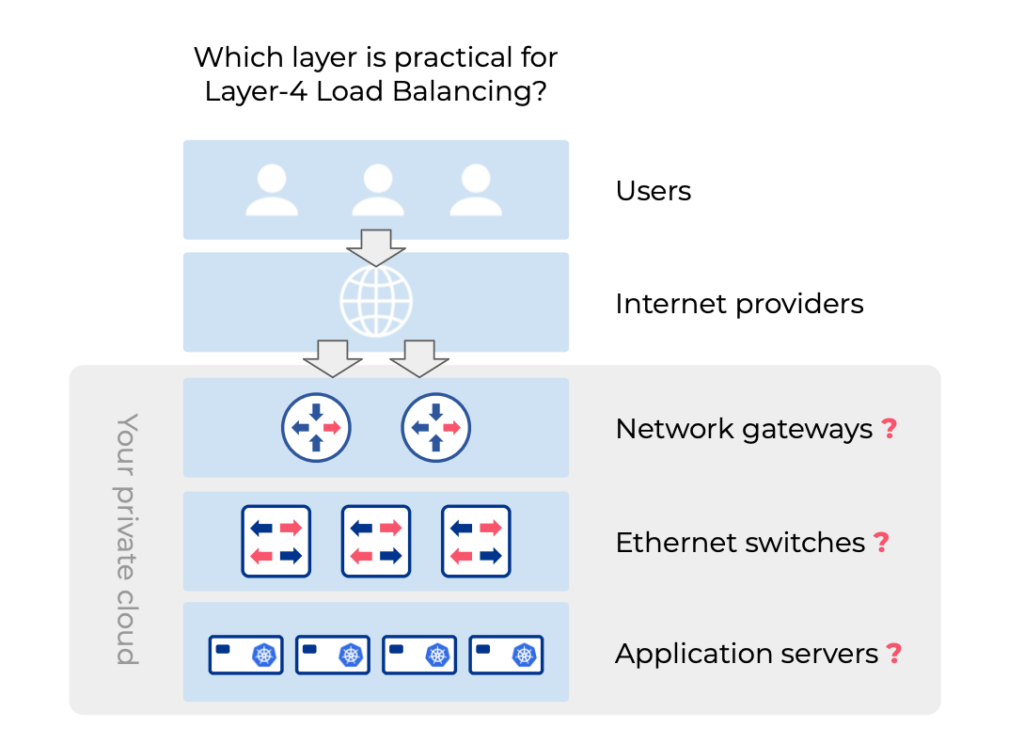

Where is the most practical place for the Layer-4 load balancer function in my architecture?

Ethernet switches can load balance traffic. But is this suitable for Kubernetes?

Ethernet switches primarily provide high-performance communication between application servers within your private cloud. Modern switches use hashing algorithms over IP addresses and TCP/UDP port numbers to spread traffic, most commonly for Layer-2 load balancing (e.g., across the member links of a LAG interface) and Layer-3 load balancing (e.g., ECMP across the switch fabric). ECMP-based Layer-3 load balancing is also used to balance traffic across servers, particularly in high-performance content delivery architectures such as RoH (routing on the host). This method offers ultra-high performance, but ECMP selects the group of servers by destination IP prefix alone, so all TCP ports of a given IP address go to the same server group. Serving different applications or ports from separate server pools therefore requires a dedicated public IP address for each, which wastes addresses.
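The port limitation of switch-based ECMP can be illustrated with a small model. In this sketch (a simplification, assuming a hypothetical route table with documentation-range addresses and using SHA-256 as a stand-in for a switch's hardware hash), the server group is chosen by longest-prefix match on the destination IP alone, and the 5-tuple hash only picks a member within that group:

```python
import hashlib
import ipaddress

# Hypothetical routing table: destination prefix -> ECMP next-hop group (server IPs).
# The key is a prefix only; TCP/UDP ports play no part in choosing the group.
ROUTES = {
    ipaddress.ip_network("203.0.113.10/32"): ["10.0.0.11", "10.0.0.12", "10.0.0.13"],
}


def lookup_group(dst_ip: str):
    """Longest-prefix match on the destination IP only."""
    addr = ipaddress.ip_address(dst_ip)
    matches = [net for net in ROUTES if addr in net]
    if not matches:
        return None
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]


def ecmp_next_hop(src_ip, src_port, dst_ip, dst_port, proto="tcp"):
    """Pick a next hop by hashing the 5-tuple, as switch ECMP/LAG hashing does."""
    group = lookup_group(dst_ip)
    if group is None:
        return None
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{proto}".encode()
    # Real switches use hardware hash functions (e.g. CRC variants); SHA-256 is a stand-in.
    h = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")
    return group[h % len(group)]
```

Flows to 203.0.113.10:80 and 203.0.113.10:443 both resolve to the same group; sending port 443 to a different set of servers would require a separate /32 prefix, i.e., another public IP address.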

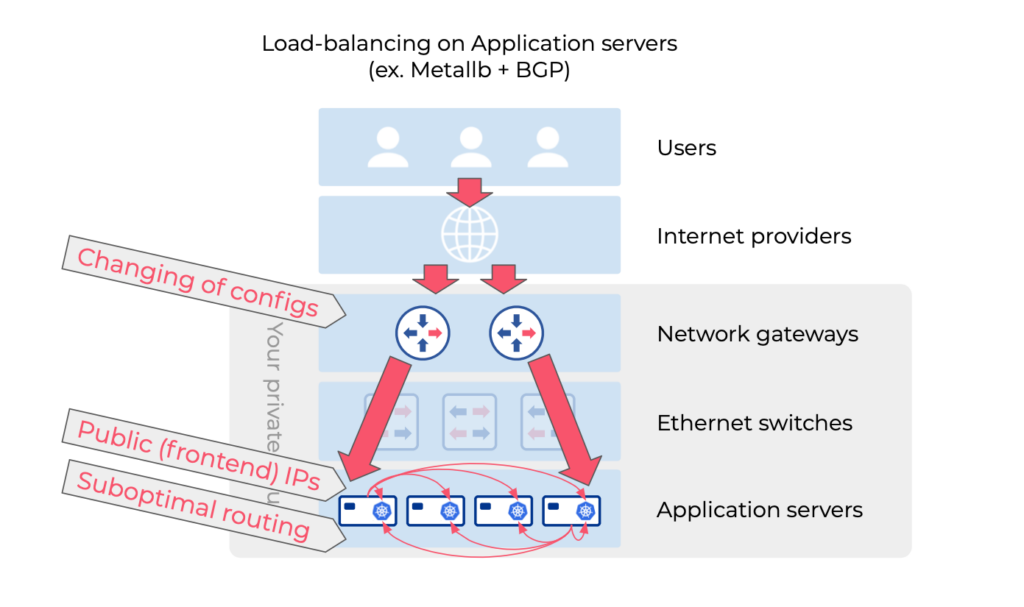

Is it practical to run a Layer-4 load balancer right on the application servers?

Tools such as MetalLB, kube-vip, NodePort services with kube-proxy, or Cilium can perform Layer-4 load balancing on the Kubernetes nodes themselves, which keeps things simple. However, routing traffic from the network gateway to these load balancers is a challenge. A static route points the public IP address at a single node, which becomes a bottleneck, while a dynamic routing protocol such as BGP with MetalLB improves high availability by advertising the public IP address from all Kubernetes nodes. This approach, though, can lead to suboptimal routing: a packet may arrive at a node that does not run the target pod and must then be forwarded to another node.
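The cost of advertising the public IP address from every node can be estimated with a simple model. Assuming the upstream router spreads flows evenly across all n nodes (BGP ECMP) and the receiving node picks a backend pod uniformly at random among all endpoints (roughly kube-proxy's behavior with `externalTrafficPolicy: Cluster`), the sketch below computes the expected share of flows that need an extra node-to-node hop. The function name `extra_hop_fraction` is hypothetical.

```python
from fractions import Fraction


def extra_hop_fraction(endpoints_per_node):
    """
    Expected share of flows that must be forwarded to another node.

    Illustrative model, not a measurement. Assumes:
      * the upstream router spreads flows evenly across every node advertising the VIP;
      * the receiving node picks a backend pod uniformly at random among all endpoints.
    """
    n = len(endpoints_per_node)
    total = sum(endpoints_per_node)
    if n == 0 or total == 0:
        raise ValueError("need at least one node and one endpoint")
    # A flow stays local only if the randomly chosen endpoint lives on the receiving node.
    p_local = sum(Fraction(1, n) * Fraction(e, total) for e in endpoints_per_node)
    return 1 - p_local
```

Under these assumptions a flow stays local with probability sum_i (1/n)(e_i/E) = 1/n, so the extra-hop share is 1 - 1/n regardless of where the pods run: with four nodes, about three out of four flows are forwarded again.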

BOTTOM LINE: Application servers are not the best place to perform load balancing.

- Potentially suboptimal routing

- Public IP addresses hosted directly on Kubernetes nodes (a security concern)

- BGP configuration on the routers/switches must be changed every time you add, move, or remove a Kubernetes node

- Potential conflicts with the Calico CNI, which may also need BGP sessions with the same routers
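To make the configuration churn concrete: if every Kubernetes node peers with the upstream router, each node change alters the router's neighbor list. The sketch below renders FRR-style `neighbor ... remote-as ...` lines and computes which lines to add and remove. The ASNs (64512 and 64513, from the private range), node addresses, and helper names are hypothetical, and real deployments may use peer groups or dynamic neighbors instead.

```python
import ipaddress


def bgp_neighbor_config(local_asn: int, k8s_asn: int, node_ips):
    """Render FRR-style neighbor statements, one per Kubernetes node, in address order."""
    lines = [f"router bgp {local_asn}"]
    lines += [f" neighbor {ip} remote-as {k8s_asn}" for ip in sorted(node_ips, key=ipaddress.ip_address)]
    return lines


def config_changes(old_nodes, new_nodes, local_asn=64512, k8s_asn=64513):
    """Return (lines to add, lines to remove) on the router after a node change."""
    old = set(bgp_neighbor_config(local_asn, k8s_asn, old_nodes))
    new = set(bgp_neighbor_config(local_asn, k8s_asn, new_nodes))
    return sorted(new - old), sorted(old - new)
```

Replacing node 10.0.0.12 with 10.0.0.13, for example, yields one neighbor line to add and one to remove, and that change has to be applied on every router the nodes peer with.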

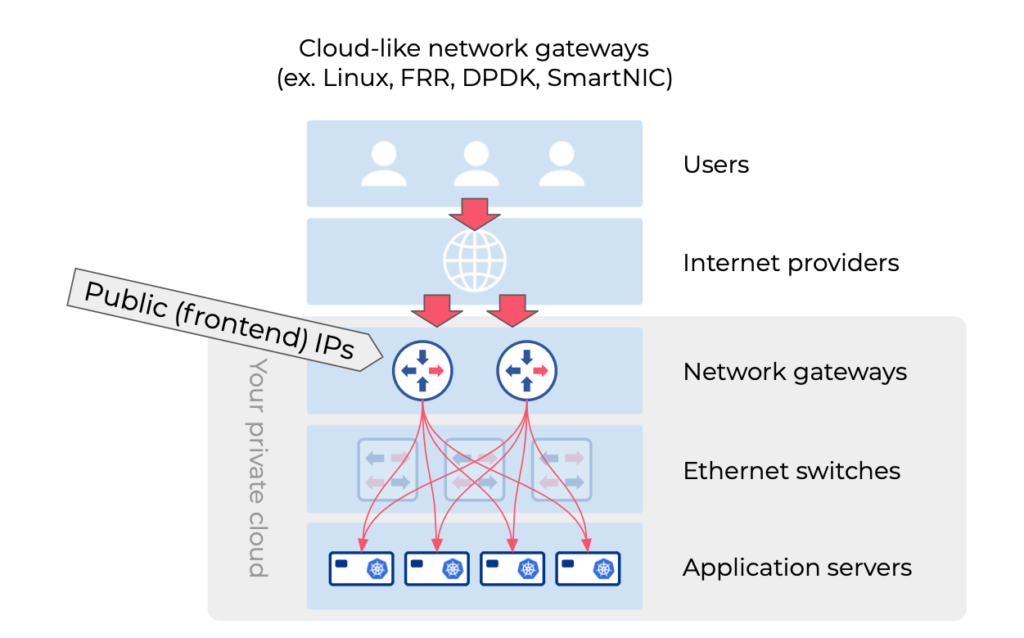

Why are network gateways great places for Layer-4 load balancing functions?

Cloud-like load balancers for Kubernetes have become essential in on-premises and bare-metal environments, and managing them has traditionally been a challenge for network operations teams. Tools such as MetalLB, kube-vip, NodePort with kube-proxy, or Cilium simplify the load balancing itself, yet routing traffic from the network gateway to these load balancers remains a hurdle: BGP with MetalLB offers high availability but may lead to suboptimal routing. So while Layer-4 load balancing can run on Kubernetes nodes, directing traffic to it efficiently is the hard part. Because every inbound packet already passes through the network gateway, it is more practical to implement Layer-4 load balancing there: the gateway's central role in packet routing means the function adds minimal overhead.
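The core of gateway-based Layer-4 load balancing is a virtual-service table keyed by VIP, port, and protocol, plus destination NAT toward the chosen backend. The sketch below is a simplified model under stated assumptions: the table entries, the NodePort-style backend ports, and the SHA-256 flow hash are hypothetical, and a real gateway would implement this in its data plane with connection tracking and health-checked pools.

```python
import hashlib
from typing import Dict, List, NamedTuple, Optional, Tuple


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str


# Hypothetical virtual-service table on the gateway: (VIP, port, protocol) -> backend pool.
# Unlike a switch's ECMP route, the key includes the destination port.
VIRTUAL_SERVICES: Dict[Tuple[str, int, str], List[Tuple[str, int]]] = {
    ("203.0.113.10", 80, "tcp"): [("10.0.1.21", 30080), ("10.0.1.22", 30080)],
    ("203.0.113.10", 443, "tcp"): [("10.0.2.31", 30443)],
}


def dnat(pkt: Packet) -> Optional[Packet]:
    """Pick a backend by hashing the flow, then rewrite the destination (DNAT)."""
    pool = VIRTUAL_SERVICES.get((pkt.dst_ip, pkt.dst_port, pkt.proto))
    if not pool:
        # Not a load-balanced service: the gateway would forward it by normal routing.
        return None
    key = "|".join(map(str, pkt)).encode()
    idx = int.from_bytes(hashlib.sha256(key).digest()[:4], "big") % len(pool)
    backend_ip, backend_port = pool[idx]
    # Only the destination changes; the client's source address is preserved.
    return pkt._replace(dst_ip=backend_ip, dst_port=backend_port)
```

Because the key includes the destination port, one public IP address can front several applications, which avoids the address waste of the switch-based ECMP approach.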

What are the options (suitable for Kubernetes)?

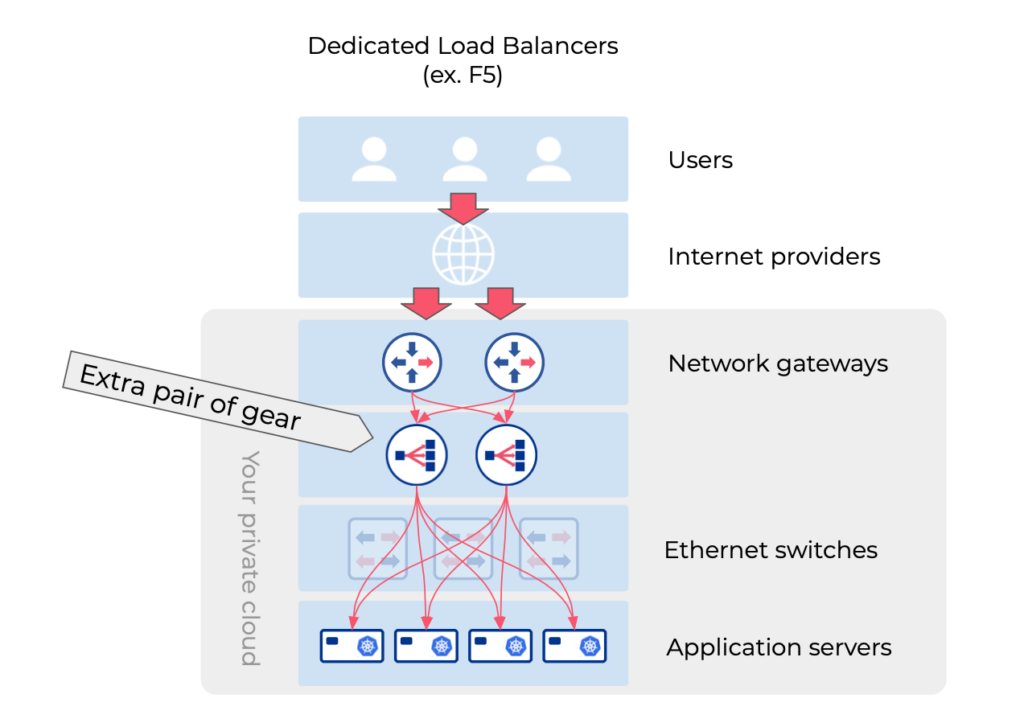

Is it overkill to use specialized load balancer appliances (e.g., F5) for Layer-4 Kubernetes load balancing?

While some modern load balancer appliances integrate with Kubernetes, they typically offer a broad range of advanced and costly load balancing services, of which Layer-4 is only a small part. If your goal is simply to expose Kubernetes applications or route traffic into Kubernetes, a specialized load balancer appliance is unnecessary. Moreover, many of these appliances lack essential gateway functions such as routing or NAT, so a separate router appliance is still needed. Conversely, most routers do not offer Layer-4 load balancing.

Please explore the provided reference for further details.