Many developers mistakenly believe that a concurrent solution will always be faster than a sequential one, but this is not always the case. The performance of a solution depends on various factors, such as the efficiency of the concurrency structure, the parts that can be processed in parallel, and the level of contention among the computation units. It is important to remember these fundamental principles of concurrency in Go. As an example, it is possible for a concurrent solution to be slower than a sequential one, depending on the specific circumstances.

Go Scheduling

To execute multiple actions simultaneously, it is possible to use multiple threads. These threads can be concurrent, meaning that they start, run, and complete at overlapping times, or they can be parallel, meaning that the same task is being executed multiple times simultaneously.

The operating system is responsible for optimizing the scheduling of these threads, ensuring that

- All the threads can consume CPU cycles without being starved for too much time.

- The workload is distributed as evenly as possible among the different CPU

As Go developers, we do not have direct control over threads, but we can create goroutines, which are lightweight threads of execution at the application level. While the operating system is responsible for context-switching OS threads on and off the CPU cores, the Go runtime is responsible for context switching goroutines on and off OS threads. Goroutines have a smaller memory footprint compared to OS threads, with a default size of 2KB in Go 1.4, compared to 2MB for Linux/x86-32. This smaller size allows for faster context-switching, which can improve the overall performance of the program.

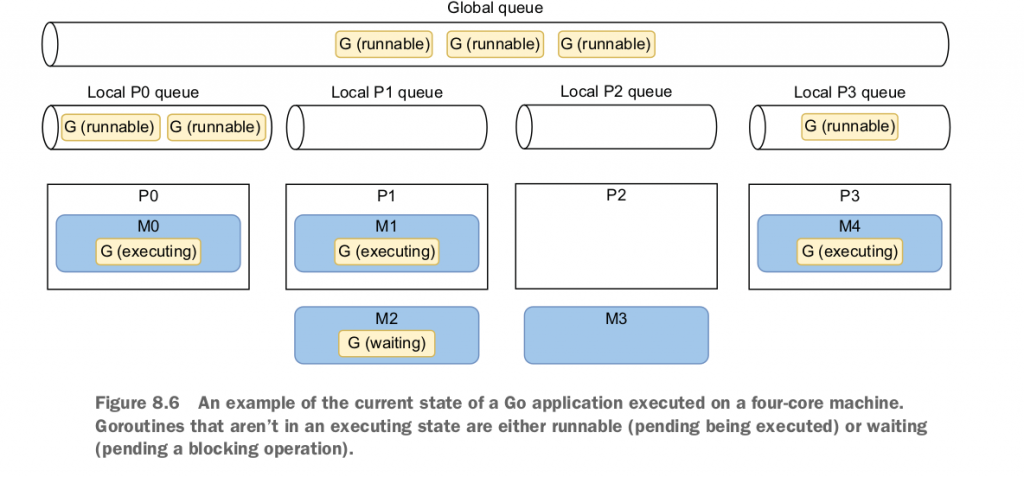

Let’s now discuss how the Go scheduler works to overview how goroutines are handled. Internally, the Go scheduler uses the following terminology:-

G—Goroutine

M—OS thread (stands for machine)

P—CPU core (stands for processor)

A goroutine has a simpler lifecycle than an OS thread. It can be doing one of the following:

- Executing—The goroutine is scheduled on an M and executing its instructions.

- Runnable—The goroutine is waiting to be in an executing state.

- Waiting—The goroutine is stopped and pending something completing, such as a system call or a synchronization operation (such as acquiring a mutex).

When a goroutine is created but cannot be immediately executed because all available processing resources are already in use, it is placed in a queue by the Go runtime. There are two types of queues in the Go runtime: a local queue for each processor (P) and a global queue that is shared among all processors. In this way, the Go runtime is able to manage the execution of goroutines and ensure that they are executed in a timely manner, even when there are more goroutines than available processing resources.

P0, P1, and P3 are currently busy executing Go runtime threads. But P2 is presently idle as M3 is switched off P2, and there’s no goroutine to be executed. This isn’t a good situation because six runnable goroutines are pending being executed, some in the global queue and some in other local queues. How will the Go runtime handle this situation? Here’s the scheduling implementation in pseudocode :

runtime.schedule() {

// Only 1/61 of the time, check the global runnable queue for a G.

// If not found, check the local queue.

// If not found,

// Try to steal from other Ps.

// If not, check the global runnable queue.

// If not found, poll network.

}The Go scheduler is designed to efficiently distribute work among available processors using a technique called “work stealing”. Every sixty-first execution, the scheduler will check for available goroutines in the global queue, and if none are found, it will look in its local queue. If both the global and local queues are empty, the scheduler may “steal” some goroutines from other local queues in order to keep all processors busy. This helps to ensure that the workload is evenly distributed among the available resources, and that underutilized processors are able to contribute to the overall execution of the program.

Parallel merge sort

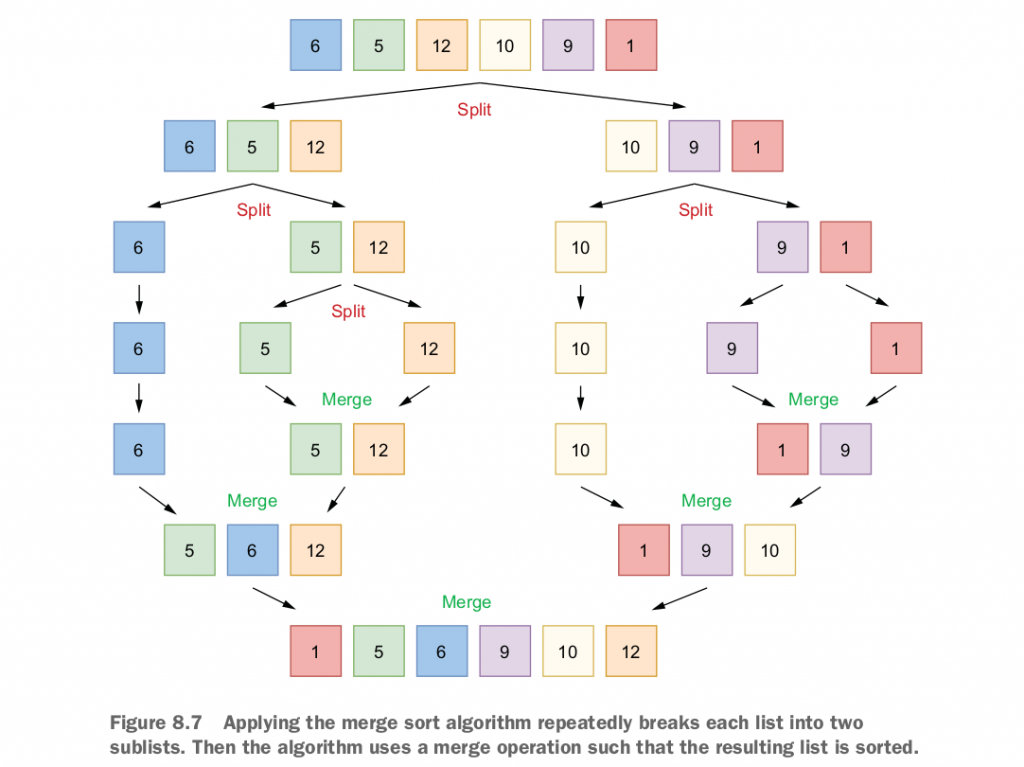

The merge sort algorithm is a sorting algorithm that works by breaking a list down into smaller sublists until each sublist consists of a single element, and then merging these sublists back together in a sorted order. This is achieved through a series of split and merge operations, as illustrated in figure 8.7. In this example, we will review the basic principles of the merge sort algorithm and then implement a parallel version. It is important to note that the goal is not to create the most efficient implementation, but rather to use this as a concrete example to demonstrate that concurrency is not always faster.

Here is the sequential implementation of this algorithm. We don’t include all of the code as it’s not the main point of this section:

func sequentialMergesort(s []int) {

if len(s) <= 1 {

return

}

middle := len(s) / 2

sequentialMergesort(s[:middle])

sequentialMergesort(s[middle:])

merge(s, middle)

}

func merge(s []int, middle int) {

// ...

}The structure of the merge sort algorithm makes it well-suited for concurrency, as each sequentialMergesort operation operates on a separate set of data that does not need to be fully copied. This allows us to easily distribute the workload among CPU cores by running each sequentialMergesort operation in a separate goroutine. In this case, we will write a parallel implementation of the algorithm by taking advantage of this property.

func parallelMergesortV1(s []int) {

if len(s) <= 1 {

return

}

middle := len(s) / 2

var wg sync.WaitGroup

wg.Add(2)

go func() {

defer wg.Done()

parallelMergesortV1(s[:middle])

}()

go func() {

defer wg.Done()

parallelMergesortV1(s[middle:])

}()

wg.Wait()

merge(s, middle)

}In this version, each half of the workload is handled in a separate goroutine. The parent goroutine waits for both parts by using sync.WaitGroup . Hence, we call the Wait method before the merge operation.

If we run a benchmark to compare this version against the sequential one, the parallel version should be faster, correct? Let’s run it on a four-core machine with 10,000 elements:

Benchmark_sequentialMergesort-4 2278993555 ns/op

Benchmark_parallelMergesortV1-4 17525998709 ns/opHow is it possible that a parallel version that distributes a workload across four cores is slower than a sequential version running on a single machine? Let’s analyze the problem.

When implementing the merge sort algorithm in parallel, we can divide the workload among multiple goroutines by recursively splitting the input slice into smaller and smaller pieces. For example, if we have a slice with 1024 elements, the parent goroutine would spawn two child goroutines to handle slices of 512 elements each. These child goroutines would in turn spawn their own child goroutines to handle slices of 256 elements, and so on, until we reach slices containing a single element. This allows us to take advantage of multiple processors or cores to parallelize the computation and improve the performance of the algorithm.

When the workload to be parallelized is very small, the overhead of creating and scheduling multiple goroutines may be greater than the benefit of distributing the work among multiple cores. In this case, it may be more efficient to simply perform the computation directly in the current goroutine, rather than creating additional goroutines. While goroutines are generally lightweight and fast to start, there are cases where the workload is too small to justify the overhead of creating additional goroutines. In such cases, a concurrent or parallel implementation may not be faster than a sequential one.

To improve the efficiency of our parallel implementation, we can use a threshold to determine when it is appropriate to create a new goroutine. If the number of elements in a slice is below this threshold, we can handle the computation sequentially in the current goroutine. Otherwise, we can create a new goroutine to handle the computation in parallel. This allows us to avoid the overhead of creating and scheduling goroutines for small workloads, while still taking advantage of parallelization for larger ones. Here is a revised version of the algorithm that uses this approach.

const max = 2048

func parallelMergesortV2(s []int) {

if len(s) <= 1 {

return

}

if len(s) <= max {

sequentialMergesort(s)

} else {

middle := len(s) / 2

var wg sync.WaitGroup

wg.Add(2)

go func() {

defer wg.Done()

parallelMergesortV2(s[:middle])

}()

go func() {

defer wg.Done()172

parallelMergesortV2(s[middle:])

}()

wg.Wait()

merge(s, middle)

}

}Does this approach impact the result? Yes, it does:

Benchmark_sequentialMergesort-4 2278993555 ns/op

Benchmark_parallelMergesortV1-4 17525998709 ns/op

Benchmark_parallelMergesortV2-4 1313010260 ns/opOur v2 parallel implementation is more than 40% faster than the sequential one, thanks to this idea of defining a threshold to indicate when parallel should be more efficient than sequential.

Conclusion

We illustrated that concurrency isn’t always necessarily faster. As we have seen, spinning up goroutines to handle minimal workloads (merging only a small set of elements) demolishes the benefit we could get from parallelism. When attempting to optimize the performance of a program, it is often helpful to start with a simple, sequential version and then gradually build upon it, using tools such as profiling and benchmarks to measure the impact of each change. This allows us to determine whether a parallel version will actually be faster, rather than simply assuming that concurrency will always be beneficial. Inaccurate benchmarks or a lack of proper diagnostic tools can lead to incorrect assumptions about the performance of a program, and it is important to use these tools to ensure that the effort invested in optimizing the program is worthwhile.

Refrences:

- 100 Go Mistakes and how to avoid them, Teiva Harsanyi, Manning Publications Co