External logging is a feature that collects and stores log data from sources such as servers, applications, and devices. Logging systems of this kind are typically deployed on a container orchestration platform such as Kubernetes, where they work alongside other tools, such as log shippers, log aggregators, and log analyzers, to provide a complete logging solution.

Why External Logging?

Most systems have their own built-in logging, which stores log data on the device itself so that it can be retrieved when needed. The problem arises when an instance is reset or reformatted to its initial blank state: all of its logs are wiped, and the record of the last error, or any other log needed for debugging, can no longer be retrieved. External logging solves this by keeping the logs outside the instance, which makes it a very useful tool for debugging and troubleshooting errors that happened in the past and, ultimately, for building a more robust system.

External logging typically provides the following features:

- Collect log data from various sources and make it available for monitoring and analysis.

- Store log data in a central location, such as a database or a log management platform.

- Provide a way to filter, search, and query log data.

- Automate the process of log data collection, storage, and analysis.

- Scale horizontally to handle large volumes of log data.

Logging System in more detail

External Logging is a feature in 01cloud for collecting and managing logs in a Kubernetes cluster. It is built on top of Fluentd, an open-source log collector and forwarder, and Elasticsearch, a popular open-source search and analytics engine. External logging helps you gather the logging information of your applications in one place.

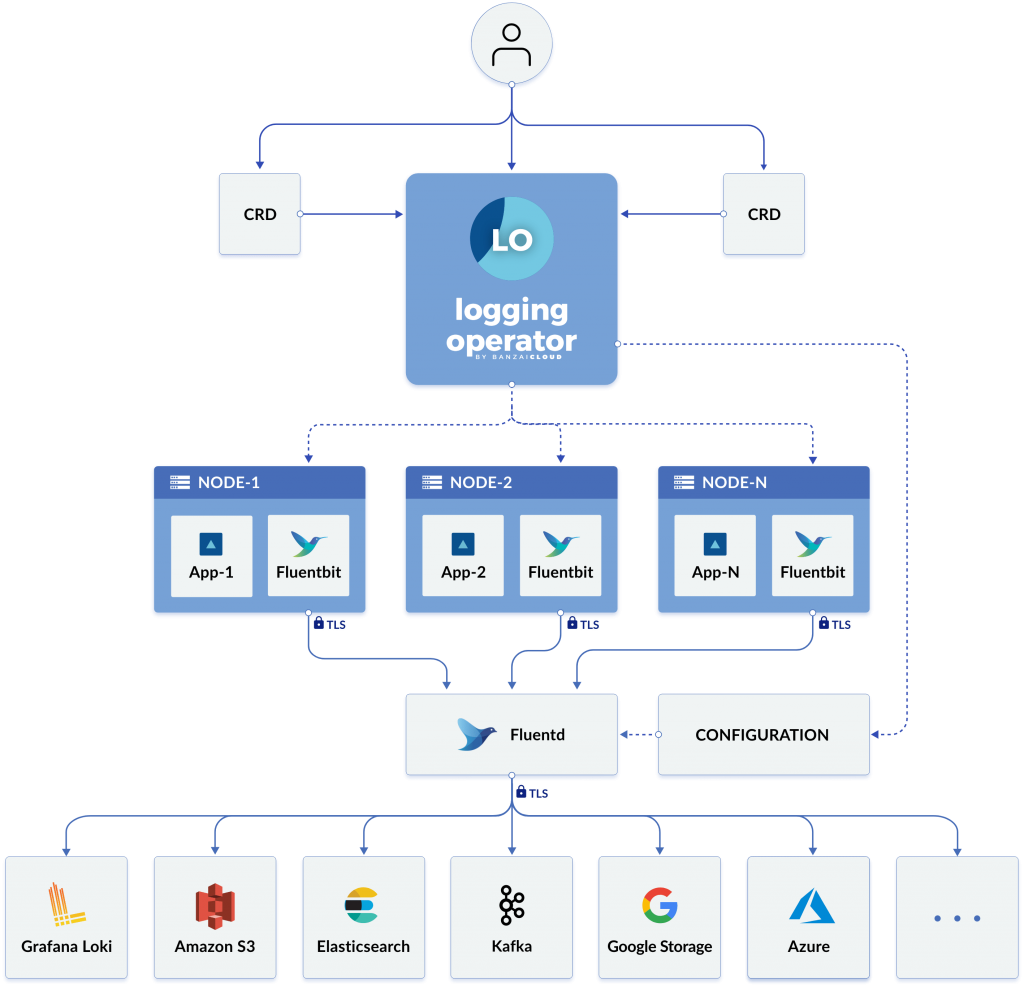

Architecture

The architecture is built around two kinds of definitions: outputs, which define the destinations that log messages are sent to (such as Elasticsearch, Loki, a GCP bucket, an Amazon S3 bucket, or Amazon CloudWatch), and flows, which use filters and selectors to route log messages to the appropriate outputs.

The external logging is divided into the following modules:

- Logging: the resource that defines the core logging infrastructure, such as which namespaces to monitor and the underlying Fluent Bit/Fluentd configuration.

- Flow/ClusterFlow: defines the filters (e.g. parsers, tags, label selectors) and output references. Flow is a namespaced resource, whereas ClusterFlow applies to the entire cluster.

- Output/ClusterOutput: controls where the logs are sent (e.g. Amazon CloudWatch, S3, Kinesis, Datadog, Elasticsearch, Grafana Loki, Kafka, New Relic, Splunk, Sumo Logic, Syslog).
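The flow/output split can be illustrated with a small in-memory model. The Python sketch below is not the 01cloud or Kubernetes implementation: `Output`, `Flow`, and `route` are hypothetical names, and label matching is reduced to exact equality. It shows how a namespaced flow selects records by namespace and labels, how a flow without a namespace behaves like a cluster-wide ClusterFlow, and how one record can fan out to several outputs.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Output:
    """Hypothetical stand-in for an Output: a named destination that collects records."""
    name: str
    received: list = field(default_factory=list)

    def send(self, record: dict) -> None:
        self.received.append(record)


@dataclass
class Flow:
    """Hypothetical stand-in for a Flow: a label selector plus output references."""
    namespace: Optional[str]  # None behaves like a cluster-wide flow (ClusterFlow)
    match_labels: dict
    output_refs: list

    def matches(self, record: dict) -> bool:
        # A namespaced flow only sees records from its own namespace.
        if self.namespace is not None and record.get("namespace") != self.namespace:
            return False
        # Every selector label must be present on the record with the same value.
        labels = record.get("labels", {})
        return all(labels.get(k) == v for k, v in self.match_labels.items())


def route(records, flows, outputs):
    """Deliver each record once to every output referenced by a flow that selects it."""
    by_name = {o.name: o for o in outputs}
    for record in records:
        targets = []
        for flow in flows:
            if flow.matches(record):
                for ref in flow.output_refs:
                    if ref not in targets:
                        targets.append(ref)
        for ref in targets:
            by_name[ref].send(record)
```

Collecting the target outputs before sending ensures that a record reaches each output only once, even when several matching flows reference the same destination.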

01cloud is a PaaS (Platform as a Service) provider that offers a comprehensive solution for developing and deploying many types of applications, from simple cloud-based apps to enterprise-level tools. The platform is built on the Kubernetes architecture and prioritizes security, providing a consistent and standardized environment for organizational projects.

Purpose of external logging in 01cloud

The main purpose of implementing external logging in 01cloud is to make it easy for users to collect the logs of their specific environment and to store them for future use. Logging also provides features such as filtering, aggregation, and alerting, which make it easier to identify and troubleshoot issues within a cluster. With external logging, a client can view and store the logs of a specific environment in a preferred destination such as Loki, Amazon S3, or Elasticsearch. Overall, the purpose of external logging is to make log management and analysis more efficient and effective within a Kubernetes environment.

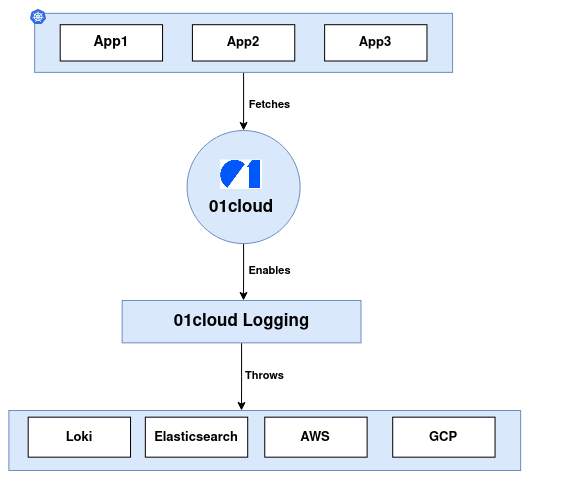

Architecture

01cloud collects the logs from the application and passes them to the user's preferred destination. The user creates an environment and enables logging. Once logging is enabled, the logs of that application are passed to 01cloud logging, where they are processed according to the logging configuration and forwarded to the destination provided by the user, which stores and manages them.

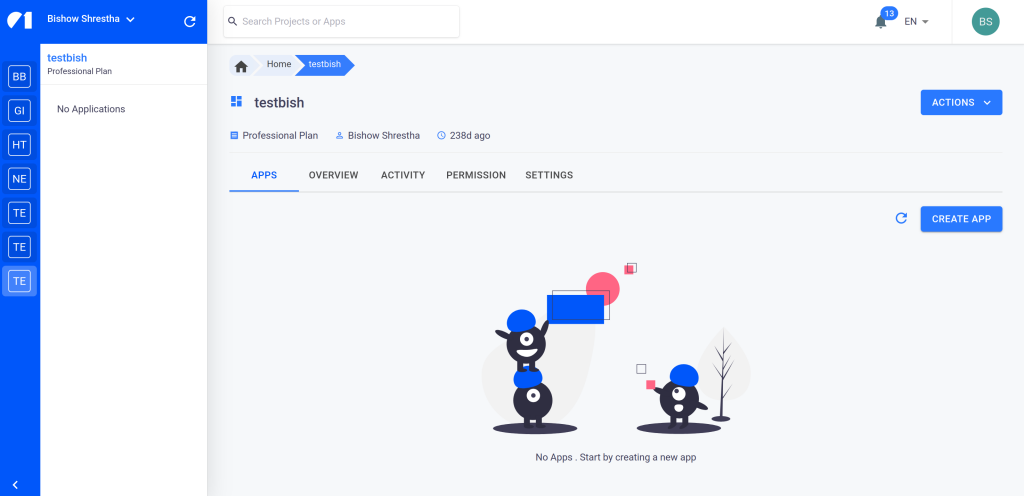

External Logging setup for 01cloud

- Access the 01cloud dashboard and create a new project. Within the project, create a new application.

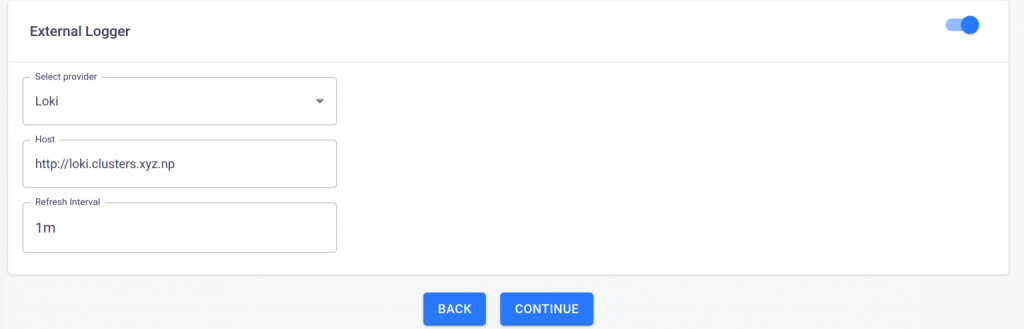

- Once the application has been created, create an environment and set up logging. The custom installation tab includes an option for setting up external logging.

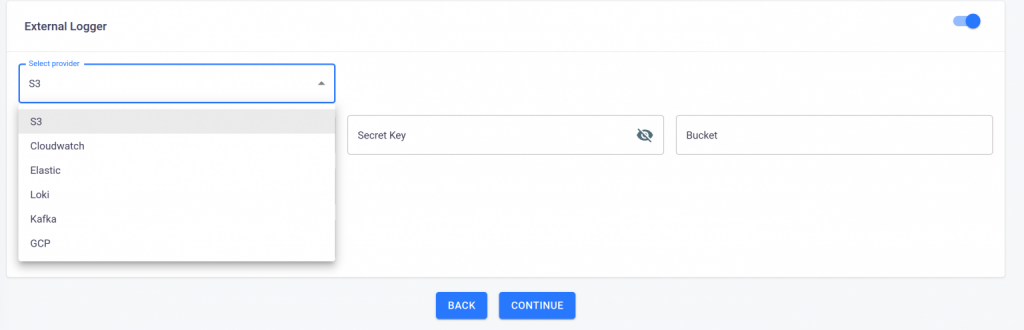

- Logging currently supports integration with services such as Amazon S3 buckets, CloudWatch, Elastic, Loki, Kafka, and GCP buckets.

- In this example, choose the Loki provider and enter the host URL of the Loki instance.

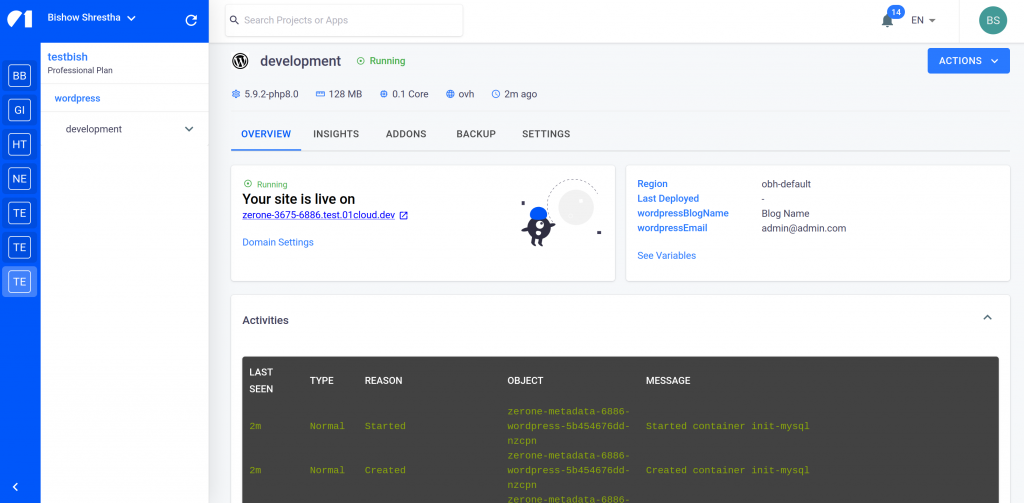

- After selecting the Loki provider, create the environment. Once the environment status is running, its logs are visible in the Grafana dashboard.

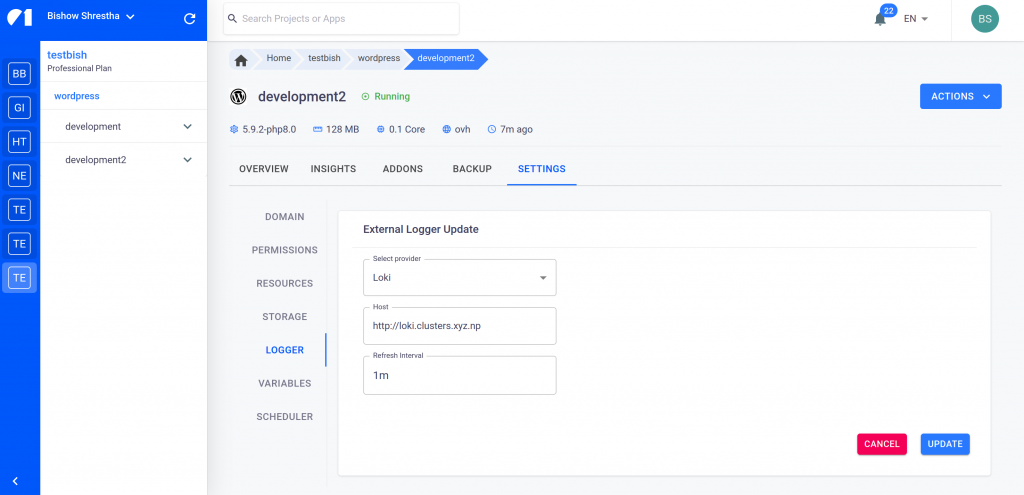

- The logging credentials can be edited later by opening the settings tab and then the logging tab.

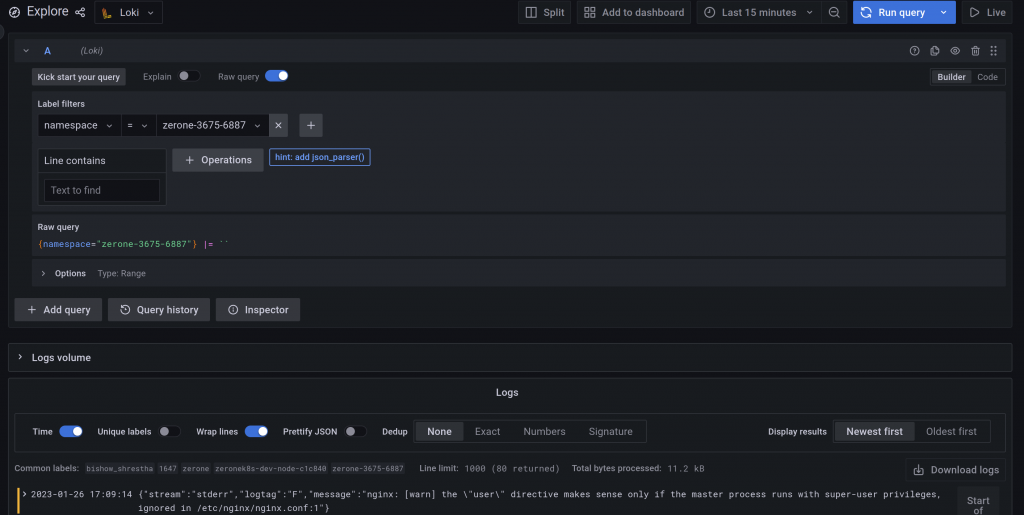

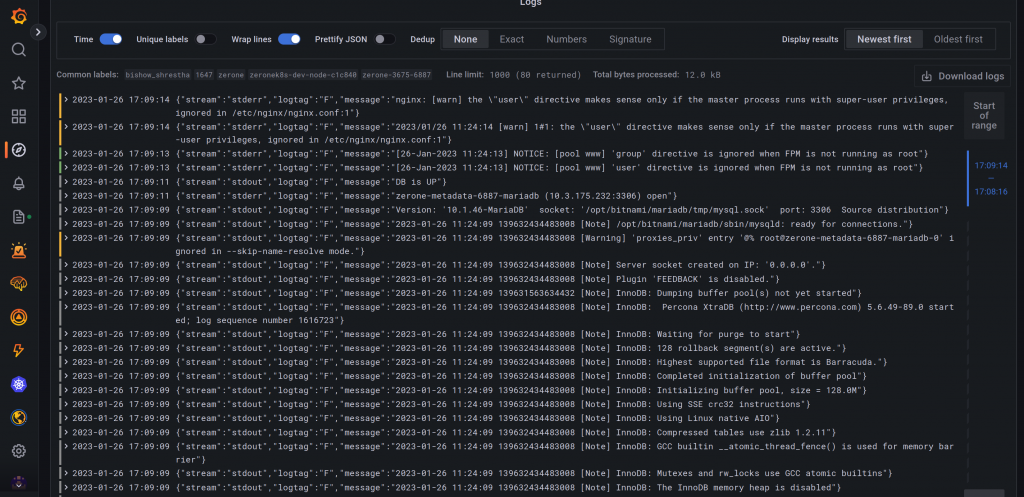

- Access the Grafana dashboard to view the logs for the selected environment.

- This is the log for the user’s WordPress application.

Advantages of external logging in 01cloud

- With external logging, the logs of a specific environment are stored in the user's preferred destination.

- Because the logs are kept in that destination, users can conveniently access and review them whenever needed.

- Specific events and errors that occur while the application is running can be tracked and recorded.

- Multiple output support: the same logs can be stored in multiple destinations (S3, GCS, Elasticsearch, Loki, and more).

- Support for debugging and troubleshooting.

- Logging also provides features such as filtering, aggregation, and alerting, making it easier to identify and troubleshoot issues within a cluster.

Logging setup for various providers in 01cloud

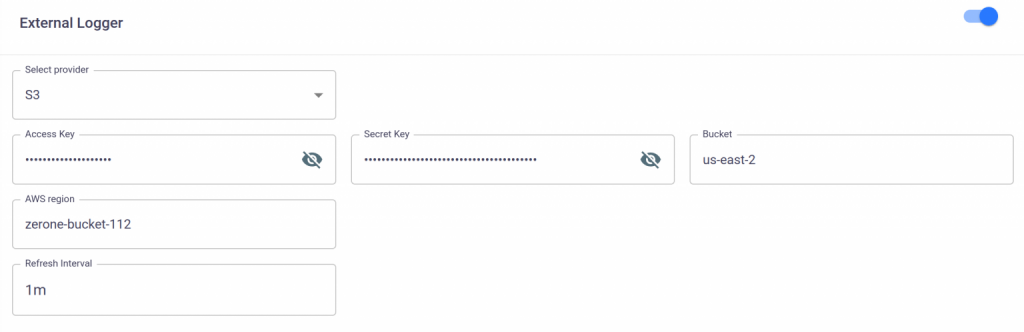

AWS

To send logs to an Amazon S3 bucket on Amazon Web Services (AWS), the user needs an access key, a secret key, the bucket name, and the AWS region. The logs are stored in the bucket under a path named “logging”.

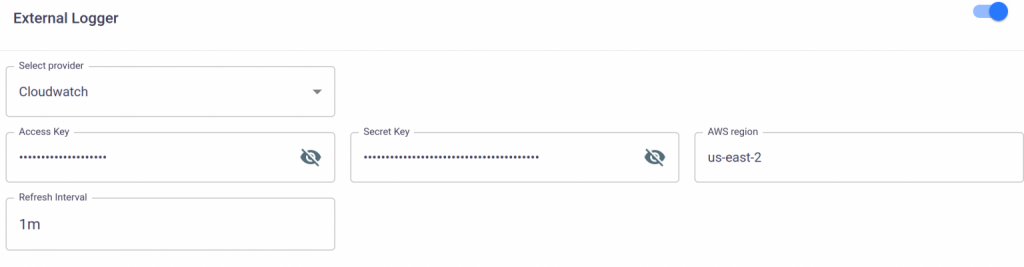
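Once logs are reaching the bucket, they can also be read outside the 01cloud dashboard. The Python sketch below lists the log objects stored under the “logging” path. It assumes a client object that exposes the boto3 S3 method `list_objects_v2` (for example `boto3.client("s3", region_name=..., aws_access_key_id=..., aws_secret_access_key=...)`); the client is passed in so the sketch runs without boto3 installed. The key layout below the prefix is not documented here, so `list_log_keys` (a hypothetical helper) simply returns every key it finds, and the `"logging/"` prefix is an assumption based on the path name above.

```python
def list_log_keys(s3_client, bucket: str, prefix: str = "logging/") -> list:
    """Return every object key under `prefix`, following S3 pagination.

    s3_client: assumed to be a boto3 S3 client (or anything exposing list_objects_v2).
    prefix: assumed to match the "logging" path used by 01cloud.
    """
    keys = []
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        response = s3_client.list_objects_v2(**kwargs)
        # "Contents" is absent when no objects match the prefix.
        keys.extend(obj["Key"] for obj in response.get("Contents", []))
        if not response.get("IsTruncated"):
            return keys
        # S3 returns at most 1000 keys per call; resume from the continuation token.
        kwargs["ContinuationToken"] = response["NextContinuationToken"]
```

Passing the client in, rather than creating it inside the function, keeps credential handling in one place and makes the helper easy to test with a fake client.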

CloudWatch

To send logs to Amazon CloudWatch, the user needs an access key, a secret key, and the AWS region. The logs are stored in CloudWatch in a log group named “operator-log-group”.

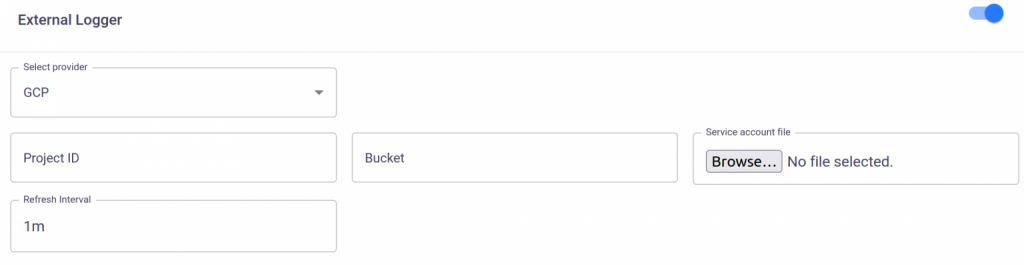
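Logs in the “operator-log-group” log group can be searched with CloudWatch Logs filter patterns. The sketch below assumes a client exposing the boto3 CloudWatch Logs method `filter_log_events` (for example `boto3.client("logs", region_name=...)`); `find_log_events` is a hypothetical helper, and the client is injected so the sketch itself has no dependency on boto3.

```python
def find_log_events(logs_client, filter_pattern: str = "",
                    log_group: str = "operator-log-group", max_events: int = 100) -> list:
    """Return up to `max_events` messages from `log_group` that match `filter_pattern`.

    logs_client: assumed to be a boto3 CloudWatch Logs client.
    """
    messages = []
    kwargs = {"logGroupName": log_group}
    if filter_pattern:
        kwargs["filterPattern"] = filter_pattern
    while len(messages) < max_events:
        response = logs_client.filter_log_events(**kwargs)
        messages.extend(event["message"] for event in response.get("events", []))
        # A missing nextToken means there are no more pages to read.
        token = response.get("nextToken")
        if not token:
            break
        kwargs["nextToken"] = token
    return messages[:max_events]
```

For example, `find_log_events(client, filter_pattern="ERROR")` would return messages containing the term `ERROR`; the pattern is only an illustration and should be adapted to the application's log format.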

GCP

To send logs to a Google Cloud Platform (GCP) storage bucket, the user needs the project ID, the bucket name, and a service account file. The logs are stored in the bucket under a path named “logs”.

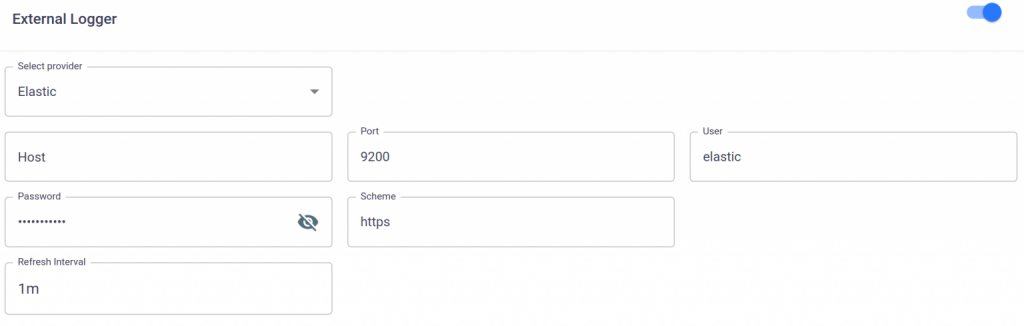
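Objects under the “logs” path can be inspected with the Google Cloud Storage client library. The sketch assumes a client exposing `list_blobs(bucket_name, prefix=...)`, such as `storage.Client.from_service_account_json(<service account file>, project=<project ID>)` from `google-cloud-storage`; `latest_log_blob` is a hypothetical helper that picks the most recently updated object, and the `"logs/"` prefix is an assumption based on the path name above.

```python
def latest_log_blob(gcs_client, bucket_name: str, prefix: str = "logs/"):
    """Return the most recently updated blob under `prefix`, or None if there is none.

    gcs_client: assumed to be a google-cloud-storage Client (or anything with list_blobs).
    """
    blobs = gcs_client.list_blobs(bucket_name, prefix=prefix)
    # `updated` is the object's last-modified time; skip blobs where it is unset.
    candidates = [blob for blob in blobs if blob.updated is not None]
    return max(candidates, key=lambda blob: blob.updated, default=None)
```

The returned blob's contents can then be read with `blob.download_as_text()`.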

Elastic

To send logs to Elastic, the user provides the host URL, port, username, password, and scheme. Log messages are stored in an index named “fluentd”. To view them, a user can create an index pattern in Elastic that matches this index, for example “fluentd*”.

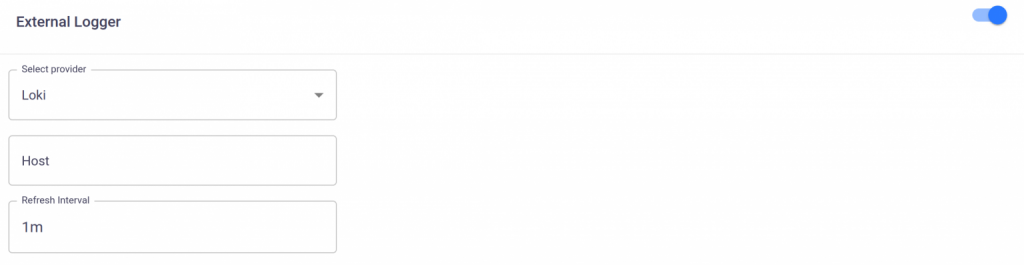
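Besides browsing the “fluentd*” index pattern, the stored logs can be queried directly through the Elasticsearch `_search` API using the same scheme, host, port, username, and password. The sketch below only builds the request with Python's standard library; `build_search_request` is a hypothetical helper, `host` is assumed to be a bare hostname (the scheme is passed separately), and the field name `message` is an assumption, since the field that holds the log line depends on how the records were parsed.

```python
import base64
import json
import urllib.request


def build_search_request(scheme, host, port, username, password, text,
                         index_pattern="fluentd*", field="message", size=20):
    """Build (but do not send) an Elasticsearch _search request for matching log lines."""
    url = f"{scheme}://{host}:{port}/{index_pattern}/_search"
    # A full-text match on one field; `field` is an assumed name for the log line.
    body = {"size": size, "query": {"match": {field: text}}}
    # HTTP basic authentication with the Elastic username and password.
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
```

To send it, pass the request to `urllib.request.urlopen` and read the matching documents from `["hits"]["hits"]` in the JSON response.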

Loki

For Loki, the user provides the host URL. The log data can then be viewed in the Grafana dashboard through a data source named “Loki”.
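The same logs that Grafana shows through the “Loki” data source can also be fetched from Loki's HTTP API at the host URL. The sketch builds a `query_range` URL for a LogQL stream selector; `loki_query_range_url` is a hypothetical helper, and the `namespace` label in the test selector is an assumption, because the labels attached to each stream depend on the logging pipeline's configuration.

```python
import time
from urllib.parse import urlencode


def loki_query_range_url(host_url, selector, minutes=60, limit=100, now_ns=None):
    """Build a Loki query_range URL covering the last `minutes` minutes."""
    end = now_ns if now_ns is not None else time.time_ns()
    # Loki accepts start/end as Unix timestamps in nanoseconds.
    start = end - minutes * 60 * 1_000_000_000
    params = {
        "query": selector,        # LogQL stream selector, e.g. '{namespace="my-env"}'
        "start": start,
        "end": end,
        "limit": limit,
        "direction": "backward",  # newest entries first
    }
    return f"{host_url.rstrip('/')}/loki/api/v1/query_range?{urlencode(params)}"
```

The same selector can be entered in Grafana's Explore view with the “Loki” data source selected.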

Conclusion

The external logging feature is highly beneficial for organizing and managing logging infrastructure across multiple clusters and teams with varying logging requirements. It simplifies setting up logs because it handles log collection, routing, and storage. With this implementation, logs for specific applications can be stored in various destinations, which makes debugging and troubleshooting more convenient. The result is more efficient log management and faster identification and resolution of issues as they arise.